In the previous post, we saw the RTOS basics (Part 1). This RTOS Advanced Tutorial is a continuation of that part.

RTOS Advanced Tutorial

Real-Time Operating Systems

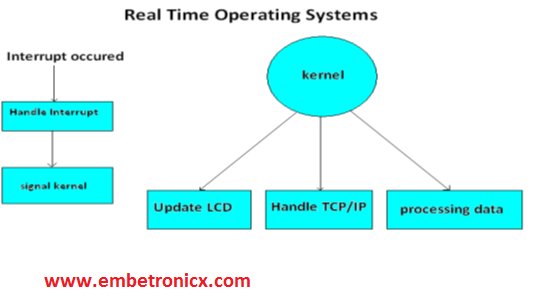

In Real-Time Operating Systems, each activity is set up as its own task, which runs independently under the supervision of the kernel. For example, in Fig 1.5, one task updates the screen, another task handles the communications (TCP/IP), and a third task processes the data. All three tasks run under the supervision of the kernel.

When an interrupt occurs from an external source, the interrupt handler handles that particular interrupt and passes the information to the appropriate task by making a call to the kernel.

When do we opt for an RTOS?

An RTOS helps simplify the code and make it more robust. For example, if the system has to accept inputs from multiple sources, handle various outputs, and also perform some sort of calculation or processing, an RTOS makes a lot of sense.

Advantages of using RTOS

- RTOS can run multiple independent activities.

- Support for complex communication protocols (TCP/IP, I2C, CAN, USB, etc.). These protocols come with the RTOS as libraries provided by the RTOS vendors.

- File System.

- GUI (Graphical User Interface).

RTOS Tracking Mechanisms

- Task Control Block (TCB): tracks the status of individual tasks

- Device Control Block (DCB): tracks the status of system-associated devices

- Dispatcher/Scheduler: its primary function is to determine which task executes next

Kernel

The heart of every operating system is called the ‘kernel’. Tasks are relieved of monitoring the hardware; it is the responsibility of the kernel to manage and allocate the resources. As tasks cannot acquire CPU attention all the time, the kernel must also provide some more services. These include:

- Interrupt handling services

- Time services

- Device management services

- Memory management services

- Input-output services

The kernel also takes care of the tasks themselves. This involves the following:

- Creating a task

- Deleting a task

- Changing the priority of the task

- Changing the state of the task
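As a minimal sketch of these four services, the C code below keeps a fixed-size table of task control blocks. All names (`tcb`, `task_create`, `task_delete`, `task_set_priority`, `task_set_state`, `MAX_TASKS`) are hypothetical and do not belong to any particular RTOS; a real kernel would also allocate a stack and an initial CPU context for each task.

```c
#include <stddef.h>

#define MAX_TASKS 8   /* hypothetical fixed-size task table */

typedef enum { TASK_UNUSED = 0, TASK_READY, TASK_RUNNING, TASK_BLOCKED } task_state;

/* Minimal task control block (TCB): the kernel's record of one task. */
typedef struct {
    int        id;
    task_state state;
    unsigned   priority;
    void     (*entry)(void);   /* the task's function */
} tcb;

static tcb task_table[MAX_TASKS];   /* zero-initialised: every slot starts TASK_UNUSED */

/* Look up a live task by id; NULL if the id is invalid or the slot is free. */
static tcb *find_task(int id) {
    if (id < 0 || id >= MAX_TASKS || task_table[id].state == TASK_UNUSED)
        return NULL;
    return &task_table[id];
}

/* Creating a task: claim a free TCB. Returns the new id, or -1 if the table is full. */
int task_create(void (*entry)(void), unsigned priority) {
    for (int i = 0; i < MAX_TASKS; ++i) {
        if (task_table[i].state == TASK_UNUSED) {
            task_table[i] = (tcb){ .id = i, .state = TASK_READY,
                                   .priority = priority, .entry = entry };
            return i;
        }
    }
    return -1;
}

/* Deleting a task: release its TCB so the slot can be reused. */
int task_delete(int id) {
    tcb *t = find_task(id);
    if (t == NULL) return -1;
    t->state = TASK_UNUSED;
    return 0;
}

/* Changing the priority of a task. */
int task_set_priority(int id, unsigned priority) {
    tcb *t = find_task(id);
    if (t == NULL) return -1;
    t->priority = priority;
    return 0;
}

/* Changing the state of a task (task_delete is the only way to free it). */
int task_set_state(int id, task_state state) {
    tcb *t = find_task(id);
    if (t == NULL || state == TASK_UNUSED) return -1;
    t->state = state;
    return 0;
}
```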

Functioning of RTOS

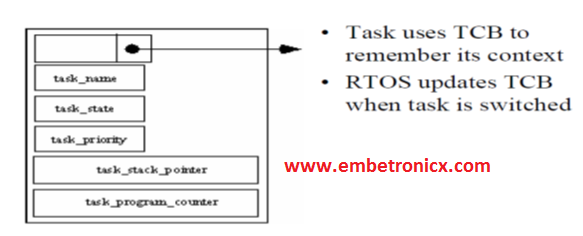

- Decides which task is to be executed – task switching

- Maintains information about the state of each task – task context

- Maintains the task’s context in a block – called the task control block

Possible states of Tasks

- The task under execution – running state

- Tasks ready for execution – ready state

- Tasks waiting for an external event – waiting (blocked) state

The scheduler decides which task to run.
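The three states and the moves between them can be written down as a small transition check. This is a simplified model under our own assumptions (real kernels such as FreeRTOS also have states like suspended and deleted), and `transition_allowed` is a hypothetical name. Note that a blocked task never jumps straight to running: it first becomes ready, and then the scheduler decides when it runs.

```c
#include <stdbool.h>

typedef enum { STATE_READY, STATE_RUNNING, STATE_BLOCKED } task_state;

/* Returns true if a task may move directly from `from` to `to`
   in this simplified three-state model. */
bool transition_allowed(task_state from, task_state to) {
    switch (from) {
    case STATE_READY:
        return to == STATE_RUNNING;            /* scheduler dispatches it */
    case STATE_RUNNING:
        return to == STATE_READY ||            /* preempted, or its time slice ended */
               to == STATE_BLOCKED;            /* waits for an external event */
    case STATE_BLOCKED:
        return to == STATE_READY;              /* the awaited event occurred */
    }
    return false;
}
```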

Basic elements of RTOS

- Scheduler

- Scheduling Points

- Context Switch Routine

- Definition of a Task

- Synchronization

- The mechanism for inter-task communication

Scheduler

- Decides which task in the ready state queue has to be moved to the running state

- The scheduler uses a data structure called the ready list to track the tasks in a ready state
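One common way to build the ready list in small kernels is a bitmap with one bit per priority level. The sketch below assumes 32 levels, one task per level, a larger number meaning a higher priority, and level 0 reserved for an idle task that is always ready; the function names are hypothetical. Production kernels usually replace the search loop with a count-leading-zeros instruction or a lookup table.

```c
#include <stdint.h>

#define NUM_PRIORITIES 32u   /* one bit per level in a 32-bit word */
#define IDLE_PRIORITY  0u    /* hypothetical: level 0 reserved for the idle task */

/* Bit p set => the task at priority p is ready. The idle task's bit is
   always set, so the scheduler always has something to run. */
static uint32_t ready_list = UINT32_C(1) << IDLE_PRIORITY;

/* A task became ready. */
void ready_list_insert(unsigned prio) {
    if (prio < NUM_PRIORITIES)
        ready_list |= UINT32_C(1) << prio;
}

/* A task blocked or was deleted (the idle task is never removed). */
void ready_list_remove(unsigned prio) {
    if (prio < NUM_PRIORITIES && prio != IDLE_PRIORITY)
        ready_list &= ~(UINT32_C(1) << prio);
}

/* Scheduler decision: the highest ready priority moves to the running state. */
unsigned scheduler_pick_next(void) {
    for (unsigned p = NUM_PRIORITIES - 1; p > IDLE_PRIORITY; --p)
        if (ready_list & (UINT32_C(1) << p))
            return p;
    return IDLE_PRIORITY;   /* nothing else is ready */
}
```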

Task Control Block (TCB)

It stores the information the kernel keeps about each task, such as the task’s state, priority, and saved context.

Three techniques are commonly adopted to schedule tasks:

- Co-operative scheduling: In this scheme, a task runs until it completes its execution or voluntarily gives up the CPU.

- Round Robin Scheduling: Each task is assigned a fixed time slot in this scheme. If the task does not complete its execution within its slot, it is preempted and has to wait for its next turn to continue.

- Preemptive Scheduling: CPU time is allocated according to priority: the highest-priority ready task runs and preempts any lower-priority task. Typically, 256 priority levels are used, and each task is assigned a unique priority level. Some systems support more priority levels and allow multiple tasks to have the same priority.
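To see how round-robin time slicing plays out, here is a small simulation in C. It assumes all tasks are ready at time 0, ignores context-switch overhead, and uses hypothetical names (`round_robin`, `burst`, `quantum`). Each task runs for at most one quantum per turn and, on its next turn, continues from where it stopped.

```c
#include <stddef.h>

#define MAX_TASKS 16   /* hypothetical upper bound for this simulation */

/* Simulates round-robin scheduling. All n tasks are ready at t = 0 and
   task i needs burst[i] ticks of CPU time (must be > 0). Each turn a task
   runs for at most `quantum` ticks, then the next ready task gets the CPU.
   Writes task indices to `order` as they finish and returns the total ticks,
   or -1 on invalid input. */
int round_robin(const int *burst, size_t n, int quantum, size_t *order) {
    int remaining[MAX_TASKS];
    size_t finished = 0;
    int clock = 0;

    if (n > MAX_TASKS || quantum <= 0) return -1;
    for (size_t i = 0; i < n; ++i) {
        if (burst[i] <= 0) return -1;
        remaining[i] = burst[i];
    }

    while (finished < n) {
        for (size_t i = 0; i < n; ++i) {          /* visit tasks in turn */
            if (remaining[i] == 0) continue;      /* already finished */
            int slice = remaining[i] < quantum ? remaining[i] : quantum;
            clock += slice;                       /* task i runs for one slice */
            remaining[i] -= slice;
            if (remaining[i] == 0)
                order[finished++] = i;            /* done; otherwise it waits for its next turn */
        }
    }
    return clock;
}
```

With bursts of {5, 2, 4} ticks and a 2-tick quantum, the tasks finish in the order 1, 2, 0 after 11 ticks: the short task completes first even though it was not first in line.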

Idle Task

- An infinite wait loop

- Executed when no other task is ready

- Has a valid task ID and the lowest priority

Context Switch

- The process of storing the state of one task (process or thread) and restoring the state of another when switching between them, so that execution can be resumed from the same point at a later time.

- Context switching is the mechanism by which an OS can take a running process, save its state, and bring another process into execution. It does this by saving the process “context” and loading the saved context of the next process in line into the CPU.
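On a microcontroller the context switch is usually written in assembly, but the idea can be tried on Linux with the POSIX `getcontext`, `makecontext`, and `swapcontext` functions, which save and restore a full register context. These functions are obsolescent (they were removed from POSIX.1-2008) but glibc still provides them. The two-task setup and the names below are our own illustration, and the switching is cooperative rather than preemptive.

```c
#include <ucontext.h>

#define STACK_SIZE (64 * 1024)

static ucontext_t main_ctx;        /* context of the "scheduler" (main program) */
static ucontext_t task_ctx[2];     /* saved contexts of task A and task B */
static char task_stack[2][STACK_SIZE];
static char trace[8];
static int trace_len;

/* Cooperative switch: save the current task's registers and stack pointer in
   task_ctx[from], then restore task_ctx[to] and continue from there. */
static void switch_task(int from, int to) {
    swapcontext(&task_ctx[from], &task_ctx[to]);
}

static void task_a(void) {
    trace[trace_len++] = 'A';
    switch_task(0, 1);             /* A is suspended here... */
    trace[trace_len++] = 'a';      /* ...and later resumes at exactly this point */
}                                  /* returning follows uc_link to main_ctx */

static void task_b(void) {
    trace[trace_len++] = 'B';
    switch_task(1, 0);
    trace[trace_len++] = 'b';
}

/* Sets up both tasks, runs them, and returns the order in which they executed. */
const char *run_context_switch_demo(void) {
    void (*entry[2])(void) = { task_a, task_b };
    trace_len = 0;
    for (int i = 0; i < 2; ++i) {
        getcontext(&task_ctx[i]);
        task_ctx[i].uc_stack.ss_sp = task_stack[i];
        task_ctx[i].uc_stack.ss_size = sizeof task_stack[i];
        task_ctx[i].uc_link = &main_ctx;       /* where to go when the task returns */
        makecontext(&task_ctx[i], entry[i], 0);
    }
    swapcontext(&main_ctx, &task_ctx[0]);       /* runs A, then B, then A to completion */
    swapcontext(&main_ctx, &task_ctx[1]);       /* resumes B after its switch */
    trace[trace_len] = '\0';
    return trace;
}
```

The returned trace is `ABab`: each task continues from the exact point where its context was saved.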

Preemption Vs Context switch

- Preemption is when a process is taken off the CPU because a higher-priority process needs to run. Context switching is when the memory map and registers are changed.

- Context switching happens whenever the process changes, which may happen because of preemption, but also for other reasons: the process blocks, its quantum runs out, etc. Context switching also happens when a process makes a system call or an interrupt or fault is serviced.

- So preemption requires a context switch, but not all context switches are due to preemption.

Starvation

A task is starving when the scheduler gives it no CPU time.

- This could occur when a high-priority task is stuck in an infinite loop.

- A lower-priority task will then be starved unless the OS terminates that task.

- Other tasks with the same high priority will still round-robin with that task.

Task Synchronization

- In a multi-tasking system, tasks can interact with one another directly, or indirectly through common resources.

- These interactions must be coordinated, or synchronized.

- All tasks should be able to communicate with one another to synchronize their activities.

- Mechanisms like mutexes (mutual exclusion), semaphores, message queues, and monitors are used for this purpose.

- A mutex object can be in any one of two states: owned or free

Race Condition

A race condition occurs when the result of two or more tasks depends on the order or timing in which they run. Tasks (threads) typically share the same address space, so they have access to the same data and variables. When two or more threads try to update a global variable at the same time, their actions may overlap, which can cause a system failure.

Example

- Consider tasks A and B, memory locations A1 and B1

- A race condition arises if the result differs depending on whether A accesses A1 before B accesses B1, or B accesses B1 before A accesses A1

Real Example

- Let us assume that two tasks T1 and T2 each want to increment the value of a global integer by one. Ideally, the following sequence of operations would take place:

Original Scenario

- Integer `i = 0;`

- T1 reads the value of `i` from memory into a register: 0

- T1 increments the value of `i` in the register: (register contents) + 1 = 1

- T1 stores the value of the register in memory: 1

- T2 reads the value of `i` from memory into a register: 1

- T2 increments the value of `i` in the register: (register contents) + 1 = 2

- T2 stores the value of the register in memory: 2

- Integer `i = 2`

Race Condition Scenario

- Integer `i = 0;`

- T1 reads the value of `i` from memory into a register: 0

- T2 reads the value of `i` from memory into a register: 0

- T1 increments the value of `i` in the register: (register contents) + 1 = 1

- T2 increments the value of `i` in the register: (register contents) + 1 = 1

- T1 stores the value of the register in memory: 1

- T2 stores the value of the register in memory: 1

- Integer `i = 1`

- The final value of `i` is 1 instead of the expected result of 2
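The lost update comes from T1 and T2 interleaving inside the read, increment, and store sequence. A minimal sketch with POSIX threads standing in for RTOS tasks protects that sequence with a mutex, so the interleaving in the race scenario can no longer happen. The names are hypothetical; removing the lock and unlock calls would reintroduce the race (formally a data race, which is undefined behaviour in C).

```c
#include <pthread.h>
#include <stddef.h>

#define ITERATIONS 100000   /* increments performed by each task */

static long counter;                                        /* the shared global "i" */
static pthread_mutex_t counter_lock = PTHREAD_MUTEX_INITIALIZER;

/* Body of T1 and T2. The mutex makes read, increment, and store one
   indivisible step with respect to the other task. */
static void *increment_task(void *arg) {
    (void)arg;
    for (int n = 0; n < ITERATIONS; ++n) {
        pthread_mutex_lock(&counter_lock);
        counter++;                          /* read, add 1, write back */
        pthread_mutex_unlock(&counter_lock);
    }
    return NULL;
}

/* Starts T1 and T2, waits for both, and returns the final value. */
long run_t1_and_t2(void) {
    pthread_t t1, t2;
    counter = 0;
    pthread_create(&t1, NULL, increment_task, NULL);
    pthread_create(&t2, NULL, increment_task, NULL);
    pthread_join(t1, NULL);
    pthread_join(t2, NULL);
    return counter;
}
```

With the mutex in place, the result is always `2 * ITERATIONS`.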

Semaphore

The semaphore is a synchronization technique used in programming to protect shared resources (e.g., variables, data structures, memory segments, etc.) from access by multiple threads at the same time. One or more threads of execution can acquire or release a semaphore for the purposes of synchronization or mutual exclusion. A semaphore is similar to an integer variable, but its operations (increment and decrement) are guaranteed to be atomic. This means that multiple threads can increment or decrement the semaphore without interference. If the semaphore value is 0, it is locked, that is, not available to other tasks. If it has a positive value, other tasks can acquire it.
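To show what atomic increment and decrement, and blocking when the value is 0, mean in code, here is a minimal counting semaphore built from a POSIX mutex and condition variable. The type and function names (`csem`, `csem_wait`, `csem_trywait`, `csem_post`) are hypothetical; RTOS kernels implement semaphores natively, and POSIX also offers a ready-made `sem_t`. A binary semaphore is the same idea with the count limited to 0 or 1 (this sketch does not enforce that limit).

```c
#include <pthread.h>
#include <stddef.h>

typedef struct {
    pthread_mutex_t lock;       /* makes every count update atomic */
    pthread_cond_t  available;  /* waiters sleep here while count == 0 */
    unsigned        count;
} csem;

void csem_init(csem *s, unsigned initial) {
    pthread_mutex_init(&s->lock, NULL);
    pthread_cond_init(&s->available, NULL);
    s->count = initial;
}

/* Acquire: block while the semaphore is 0 (locked), then decrement. */
void csem_wait(csem *s) {
    pthread_mutex_lock(&s->lock);
    while (s->count == 0)                       /* loop guards against spurious wake-ups */
        pthread_cond_wait(&s->available, &s->lock);
    s->count--;
    pthread_mutex_unlock(&s->lock);
}

/* Non-blocking acquire: returns 1 on success, 0 if the count was 0. */
int csem_trywait(csem *s) {
    int ok = 0;
    pthread_mutex_lock(&s->lock);
    if (s->count > 0) {
        s->count--;
        ok = 1;
    }
    pthread_mutex_unlock(&s->lock);
    return ok;
}

/* Release: increment and wake one blocked task, if any. */
void csem_post(csem *s) {
    pthread_mutex_lock(&s->lock);
    s->count++;
    pthread_cond_signal(&s->available);
    pthread_mutex_unlock(&s->lock);
}
```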

A kernel can support many different types of semaphores, including

- Binary Semaphore,

- Counting Semaphore, and

- Mutual‐exclusion (Mutex) semaphores.

Binary Semaphore

- Similar to mutex

- Can have a value of 1 or 0

- Whenever a task asks for a semaphore, the OS checks if the semaphore’s value is 1

- If so, the call succeeds and the value is set to 0

- Else, the task is blocked

Binary semaphores are treated as global resources:

- They are shared among all tasks that need them.

- Making the semaphore a global resource allows any task to release it, even if that task did not initially acquire it.

Counting Semaphores

- Semaphores with an initial value greater than 1

- Can give multiple tasks simultaneous access to a shared resource, unlike a mutex

- Because a counting semaphore has no single owner, priority inheritance cannot be implemented for it

Mutexes

- Are powerful tools for synchronizing access to shared resources

- A mutual exclusion (mutex) semaphore is a special binary semaphore that supports

- ownership,

- recursive access,

- task deletion safety, and

- one or more protocols for avoiding problems inherent to mutual exclusion.

Mutex Problem

The problems that may arise with mutexes are:

- Deadlock

- Priority inversion

Deadlock

- Can occur whenever there is a circular dependency between tasks and resources

- For Example, consider two tasks A and B, each requiring two mutexes X and Y.

- Task A takes mutex X and waits for Y, task B takes mutex Y and waits for X.

- Both tasks wait forever, so they are deadlocked
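One standard way to prevent this circular wait (a technique not covered above) is lock ordering: every task acquires the mutexes in the same global order, X before Y. In the sketch below, POSIX threads stand in for tasks A and B, and the names and the shared counter are hypothetical. Because neither task can hold Y while waiting for X, the cycle described above cannot form.

```c
#include <pthread.h>
#include <stddef.h>

static pthread_mutex_t mutex_x = PTHREAD_MUTEX_INITIALIZER;
static pthread_mutex_t mutex_y = PTHREAD_MUTEX_INITIALIZER;
static int jobs_done;   /* work that needs both X and Y */

/* Task A and task B share this body. Both lock X first, then Y. */
static void *task_needing_x_and_y(void *arg) {
    (void)arg;
    for (int n = 0; n < 1000; ++n) {
        pthread_mutex_lock(&mutex_x);
        pthread_mutex_lock(&mutex_y);
        jobs_done++;
        pthread_mutex_unlock(&mutex_y);   /* release in reverse order */
        pthread_mutex_unlock(&mutex_x);
    }
    return NULL;
}

/* Runs tasks A and B concurrently; returns the number of completed jobs. */
int run_tasks_a_and_b(void) {
    pthread_t a, b;
    jobs_done = 0;
    pthread_create(&a, NULL, task_needing_x_and_y, NULL);
    pthread_create(&b, NULL, task_needing_x_and_y, NULL);
    pthread_join(a, NULL);
    pthread_join(b, NULL);
    return jobs_done;
}
```

If task B instead locked Y first and then X, the two threads could each end up holding one mutex and waiting for the other.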

Priority Inversion

Priority inversion occurs when a higher-priority task is blocked and is waiting for a resource being used by a lower-priority task, which has itself been preempted by an unrelated medium-priority task. In this situation, the higher-priority task’s priority level has effectively been inverted to the lower-priority task’s level.

Two common protocols used for avoiding priority inversion include:

- Priority inheritance protocol

- Ceiling priority protocol

Both protocols apply to the task that owns the mutex.

Priority inheritance protocol

- It ensures that the priority level of the lower priority task that has acquired the mutex is raised to that of the higher priority task that has requested the mutex when inversion happens.

- The priority of the raised task is lowered to its original value after the task releases the mutex that the higher priority task requires.

Ceiling priority protocol

- When a task first acquires the mutex, its priority level is automatically raised to the highest priority of all tasks that might request that mutex, and it stays there until the mutex is released.
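POSIX threads expose both protocols as mutex attributes: `PTHREAD_PRIO_INHERIT` for priority inheritance, and `PTHREAD_PRIO_PROTECT` together with a ceiling set by `pthread_mutexattr_setprioceiling` for the ceiling priority protocol. The helper `make_rt_mutex` below is a hypothetical convenience wrapper, not an RTOS API. The protocols are mainly meaningful for threads scheduled under a real-time policy such as `SCHED_FIFO`.

```c
#define _POSIX_C_SOURCE 200809L
#include <pthread.h>
#include <sched.h>

/* Initialises *m with the requested POSIX mutex protocol:
     PTHREAD_PRIO_INHERIT: an owner that blocks a higher-priority thread
                           temporarily inherits that thread's priority
     PTHREAD_PRIO_PROTECT: while holding the mutex, the owner runs at the
                           higher of its own priority and `ceiling`
   Returns 0 on success or an error number from the pthread calls. */
int make_rt_mutex(pthread_mutex_t *m, int protocol, int ceiling) {
    pthread_mutexattr_t attr;
    int err = pthread_mutexattr_init(&attr);
    if (err != 0) return err;

    err = pthread_mutexattr_setprotocol(&attr, protocol);
    if (err == 0 && protocol == PTHREAD_PRIO_PROTECT)
        err = pthread_mutexattr_setprioceiling(&attr, ceiling);
    if (err == 0)
        err = pthread_mutex_init(m, &attr);

    pthread_mutexattr_destroy(&attr);   /* the mutex keeps its own copy of the settings */
    return err;
}
```

A typical ceiling is the priority of the highest-priority task that will ever lock the mutex, for example `sched_get_priority_max(SCHED_FIFO)` when that task runs at the top real-time level.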

Mutex vs Semaphore

Consider the standard producer-consumer problem. Assume we have a buffer of 4096 bytes. A producer thread collects the data and writes it to the buffer. A consumer thread processes the collected data from the buffer. The objective is that both threads should not access the buffer at the same time.

Using Mutex

- A mutex provides mutual exclusion: either the producer or the consumer can hold the key (mutex) and proceed with its work. As long as the producer is filling the buffer, the consumer has to wait, and vice versa.

- At any point in time, only one thread can work with the entire common resource.

The concept can be generalized using semaphore.

Using Semaphore

A semaphore is a generalized mutex. In lieu of a single buffer, we can split the 4 KB buffer into four 1 KB buffers (identical resources). A semaphore can be associated with these four buffers. The consumer and producer can work on different buffers at the same time.
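The four-buffer idea maps onto the classic bounded-buffer pattern with two counting semaphores: one counts free buffers for the producer, the other counts filled buffers for the consumer. The sketch uses POSIX unnamed semaphores (`sem_init`, `sem_wait`, `sem_post`) and threads in place of RTOS tasks; the buffer contents, round count, and checksum are made up purely to have something to verify. With one producer and one consumer, each 1 KB buffer is used by only one thread at a time, yet both threads can be busy at once on different buffers.

```c
#include <pthread.h>
#include <semaphore.h>
#include <string.h>

#define NUM_BUFS 4      /* the 4 KB buffer split into... */
#define BUF_SIZE 1024   /* ...four 1 KB buffers */
#define ROUNDS   16     /* hypothetical number of buffers to produce */

static char bufs[NUM_BUFS][BUF_SIZE];
static sem_t empty_bufs;   /* buffers free for the producer */
static sem_t full_bufs;    /* buffers ready for the consumer */
static long checksum;

static void *producer(void *arg) {
    (void)arg;
    for (int r = 0; r < ROUNDS; ++r) {
        sem_wait(&empty_bufs);                    /* wait for a free 1 KB buffer */
        memset(bufs[r % NUM_BUFS], r, BUF_SIZE);  /* "collect" data into it */
        sem_post(&full_bufs);                     /* hand it to the consumer */
    }
    return NULL;
}

static void *consumer(void *arg) {
    (void)arg;
    for (int r = 0; r < ROUNDS; ++r) {
        sem_wait(&full_bufs);                     /* wait for a filled buffer */
        const char *b = bufs[r % NUM_BUFS];
        for (int i = 0; i < BUF_SIZE; ++i)
            checksum += b[i];                     /* "process" the data */
        sem_post(&empty_bufs);                    /* give the buffer back */
    }
    return NULL;
}

/* Runs one producer and one consumer; returns the consumer's checksum. */
long run_producer_consumer(void) {
    pthread_t p, c;
    sem_init(&empty_bufs, 0, NUM_BUFS);   /* all four buffers start free */
    sem_init(&full_bufs, 0, 0);
    checksum = 0;
    pthread_create(&p, NULL, producer, NULL);
    pthread_create(&c, NULL, consumer, NULL);
    pthread_join(p, NULL);
    pthread_join(c, NULL);
    sem_destroy(&empty_bufs);
    sem_destroy(&full_bufs);
    return checksum;
}
```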

Misconception

- There is an ambiguity between a binary semaphore and a mutex. We might have come across the claim that a mutex is a binary semaphore. But it is not! The purposes of a mutex and a semaphore are different. Because their implementations are similar, a mutex is sometimes referred to as a binary semaphore.

- Strictly speaking, a mutex is a locking mechanism used to synchronize access to a resource. Only one task (which can be a thread or a process, depending on the OS abstraction) can acquire the mutex. This means there is ownership associated with a mutex, and only the owner can release the lock (mutex).

- A semaphore is a signaling mechanism (an “I am done, you can carry on” kind of signal). For example, if you are listening to songs on your mobile (assume this is one task) and at the same time your friend calls you, an interrupt is triggered, upon which an interrupt service routine (ISR) signals the call-processing task to wake up.
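On a desktop OS there are no hardware interrupts to hook, but a POSIX signal handler is a reasonable stand-in for an ISR. In the sketch below (all names hypothetical), the handler only calls `sem_post`, which POSIX lists as async-signal-safe, and the call-processing thread blocks in `sem_wait` until it is signaled. The semaphore is released by code that never acquired it, which is exactly the signaling use that distinguishes it from a mutex.

```c
#define _POSIX_C_SOURCE 200809L
#include <pthread.h>
#include <semaphore.h>
#include <signal.h>
#include <string.h>

static sem_t call_arrived;   /* used purely as a signal, starts at 0 */
static int calls_handled;

/* "ISR": stands in for the incoming-call interrupt. Keep it short:
   just signal the task and return. */
static void incoming_call_isr(int signo) {
    (void)signo;
    sem_post(&call_arrived);
}

/* Call-processing task: sleeps until the ISR signals it. */
static void *call_task(void *arg) {
    (void)arg;
    while (sem_wait(&call_arrived) != 0) {
        /* retry if interrupted by a signal (EINTR) */
    }
    calls_handled++;
    return NULL;
}

/* Installs the handler, starts the task, fires one "interrupt",
   and returns how many calls the task processed. */
int simulate_incoming_call(void) {
    struct sigaction sa;
    pthread_t task;

    memset(&sa, 0, sizeof sa);
    sa.sa_handler = incoming_call_isr;
    sigemptyset(&sa.sa_mask);
    sigaction(SIGUSR1, &sa, NULL);

    sem_init(&call_arrived, 0, 0);
    calls_handled = 0;
    pthread_create(&task, NULL, call_task, NULL);
    raise(SIGUSR1);                  /* the "interrupt" fires */
    pthread_join(task, NULL);
    sem_destroy(&call_arrived);
    return calls_handled;
}
```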

Messaging

Messaging provides a means of communication with other systems and between tasks. The messaging services include:

- Semaphores

- Event flags

- Mailboxes

- Pipes

- Message queues

Semaphores are used to synchronize access to shared resources, such as common data areas. Event flags are used to synchronize the inter-task activities. Mailboxes, pipes, and message queues are used to send messages among tasks.

Pipe

- Communication channel used to send data between tasks

- Can be opened, closed, written to, and read from using file I/O functions in C

- Unlike a file, it is unidirectional

- Has a source end and a destination end

- Source end task can only write to the pipe; the destination end task can only read from it

- Acts as a queue

- Depending on the pipe’s length, the task on the source end can write data into the pipe until the pipe fills up

- Acts like a FIFO

- A task attempting to write to a full pipe, or a task trying to read from an empty pipe, will be blocked
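On a POSIX system, `pipe()` returns two file descriptors, and the two ends are used with the ordinary `write`, `read`, and `close` calls, matching the description above. This sketch uses a thread as the source task; the function names and the message are hypothetical, and it assumes the message fits both in the output buffer and in the pipe's internal buffer.

```c
#include <pthread.h>
#include <string.h>
#include <unistd.h>

static int pipe_fd[2];     /* pipe_fd[0]: destination (read) end, pipe_fd[1]: source (write) end */
static int write_failed;

/* Source task: only ever writes to the pipe. */
static void *source_task(void *arg) {
    const char *msg = arg;
    if (write(pipe_fd[1], msg, strlen(msg) + 1) < 0)   /* send the text plus its NUL */
        write_failed = 1;
    close(pipe_fd[1]);                                  /* the reader then sees end-of-file */
    return NULL;
}

/* Destination side: starts the source task, then reads until end-of-file.
   read() blocks while the pipe is empty. Returns the number of bytes
   received, or -1 on error. */
int pipe_transfer(const char *msg, char *out, size_t out_size) {
    pthread_t src;
    size_t total = 0;
    ssize_t n;

    if (pipe(pipe_fd) != 0) return -1;
    write_failed = 0;
    pthread_create(&src, NULL, source_task, (void *)msg);
    while (total < out_size &&
           (n = read(pipe_fd[0], out + total, out_size - total)) > 0)
        total += (size_t)n;
    pthread_join(src, NULL);
    close(pipe_fd[0]);
    return write_failed ? -1 : (int)total;
}
```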

Message Queue

- Allows transmission of arbitrary structures (messages) from task to task

- They are bi-directional

- The tasks are blocked when they are trying to write to a full queue or read from an empty queue

- Some implementations support the notion of the message type

- When a task places a message in the queue, it can associate a type identifier with the message

- The task receiving the message can determine the nature of the message’s contents by examining the type field instead of parsing the message itself
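A minimal sketch of such a queue: a fixed-size FIFO ring buffer of typed messages, where senders block on a full queue and receivers block on an empty one. It is built from a POSIX mutex and two condition variables; the capacity, payload size, message types, and all function names are hypothetical and not taken from any RTOS.

```c
#include <pthread.h>
#include <string.h>

#define QUEUE_LEN   4    /* hypothetical capacity, in messages */
#define PAYLOAD_MAX 32   /* hypothetical maximum payload size */

typedef enum { MSG_TEMPERATURE = 1, MSG_BUTTON = 2 } msg_type;   /* example types */

typedef struct {
    msg_type type;                 /* tells the receiver how to interpret payload */
    char     payload[PAYLOAD_MAX];
} message;

typedef struct {
    message         slots[QUEUE_LEN];
    int             head, tail, count;   /* FIFO ring-buffer bookkeeping */
    pthread_mutex_t lock;
    pthread_cond_t  not_full, not_empty;
} msg_queue;

void queue_init(msg_queue *q) {
    q->head = q->tail = q->count = 0;
    pthread_mutex_init(&q->lock, NULL);
    pthread_cond_init(&q->not_full, NULL);
    pthread_cond_init(&q->not_empty, NULL);
}

/* Sender blocks while the queue is full. */
void queue_send(msg_queue *q, msg_type type, const char *text) {
    pthread_mutex_lock(&q->lock);
    while (q->count == QUEUE_LEN)
        pthread_cond_wait(&q->not_full, &q->lock);
    message *m = &q->slots[q->tail];
    m->type = type;
    strncpy(m->payload, text, PAYLOAD_MAX - 1);
    m->payload[PAYLOAD_MAX - 1] = '\0';           /* always NUL-terminated */
    q->tail = (q->tail + 1) % QUEUE_LEN;
    q->count++;
    pthread_cond_signal(&q->not_empty);
    pthread_mutex_unlock(&q->lock);
}

/* Receiver blocks while the queue is empty; messages come out in FIFO order. */
message queue_receive(msg_queue *q) {
    pthread_mutex_lock(&q->lock);
    while (q->count == 0)
        pthread_cond_wait(&q->not_empty, &q->lock);
    message m = q->slots[q->head];
    q->head = (q->head + 1) % QUEUE_LEN;
    q->count--;
    pthread_cond_signal(&q->not_full);
    pthread_mutex_unlock(&q->lock);
    return m;
}
```

A receiving task would typically `switch` on `m.type` to decide how to handle `m.payload`.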

Interrupt Handling

- A decision on whether an ISR can be preempted for another task has to be made

- Certain OSes do not allow non-interrupt tasks to be scheduled during an ISR

- Certain OSes preempt the ISR in favor of a higher-priority task
